
Solving big data problems often requires developing new computing approaches and new technologies. But sometimes, the newer technologies and techniques create additional problems that didn’t previously exist. One up-and-coming big data analytics vendor that’s found a happy medium balancing new tech and proven techniques is Ocient.

Ocient was founded in 2016 by a group of technologists led by Chris Gladwin, who was the founder of object storage vendor Cleversafe, which IBM bought in 2015 for $1.4 billion. Back then, big data lakes built on distributed file systems like HDFS and object storage systems like S3 were considered cutting edge. Similarly, many companies were told that the best way to scale big data workloads was to separate the compute and storage layers, which allowed them to scale independently.

Data was so massive, we were told, that one had to centralize the data, preferably in the cloud, and bring compute to the storage. The storage media underlying HDFS or S3–and which most cloud data warehouses, like Snowflake and Redshift, are designed to use–was invariably spinning disk, which even today is the cheapest form of online storage.

But Gladwin and his crew had a different take on the situation. They saw the spinning disk that AWS was investing so heavily in as an impediment to progress. One could run massive SQL analytics jobs by sending data across NICs to the storage layer, but it wouldn’t necessarily be the fastest or the cheapest approach.

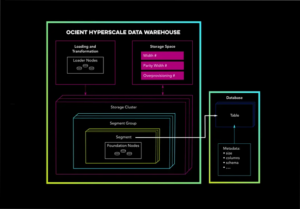

Instead, Ocient developed its own analytics database with a new architecture that’s designed around NVMe drives. And instead of separating compute and storage, Ocient’s design brought the two back together. Those two architectural design points allowed Ocient to deliver big performance gains on some of the toughest big data challenges, according to George Kondiles, Ocient’s co-founder and chief architect.

“When data get very large, in the petabytes and above, with tens to hundreds of trillions of records, what we see, what we believe to be true, is that the abstraction layer that exists between the storage and the compute is a substantial impediment to realizing huge performance gains on the queries, comparatively speaking, on that data,” Kondiles said. “We work very closely with eliminating all those abstraction layers so that we’re able to just basically talk directly to the data, read directly from the data in situ, do as much of the analysis as we can with these really wider pipes right off the boxes on these really large data sets.”

A typical NVMe drive can read data at speeds up to 3,000 MB per second and 200,000 IOPS with direct connections to the PCIe bus. A 10,000 RPM spinning disk, on the other hand, can read data at speeds up to 250 MB per second and deliver maybe 160 IOPS. When compute and storage are disconnected, as is the fashion, there’s additional network latency.
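To put those per-drive figures in perspective, here is a rough back-of-the-envelope sketch in Python. The throughput and IOPS numbers are the ones quoted above; the 1 PB data set and the `scan_hours` helper are hypothetical, and the calculation ignores network latency, caching, compression, and parallelism inside a real system.

```python
# Per-drive figures quoted in the article.
NVME_MB_PER_SEC = 3_000
NVME_IOPS = 200_000
HDD_MB_PER_SEC = 250
HDD_IOPS = 160

# Hypothetical data set size: 1 PB expressed in decimal megabytes.
ONE_PB_IN_MB = 1_000_000_000

# How many times faster one NVMe drive is than one spinning disk.
throughput_ratio = NVME_MB_PER_SEC / HDD_MB_PER_SEC
iops_ratio = NVME_IOPS / HDD_IOPS


def scan_hours(dataset_mb: float, mb_per_sec: float, drives: int = 1) -> float:
    """Hours to read the whole data set once, assuming perfect
    sequential reads spread evenly across `drives` devices."""
    return dataset_mb / (mb_per_sec * drives) / 3600


if __name__ == "__main__":
    print(f"Sequential throughput ratio: {throughput_ratio:.0f}x")
    print(f"Random IOPS ratio: {iops_ratio:.0f}x")
    for drives in (1, 100):
        nvme = scan_hours(ONE_PB_IN_MB, NVME_MB_PER_SEC, drives)
        hdd = scan_hours(ONE_PB_IN_MB, HDD_MB_PER_SEC, drives)
        print(f"{drives} drive(s): NVMe {nvme:.1f} h, HDD {hdd:.1f} h")
```

The gap is far wider for random reads than for sequential scans, which is one reason the choice of storage media matters most for workloads that jump around in the data.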

Ocient’s focus on utilizing NVMe drives gave it a big performance boost over data lakes, which invariably use spinning disk. While NVMe drives are more expensive than spinning disk, they can access data 30x faster or more, which gives them a huge performance advantage. For certain types of always-on big data workloads, the speedup that Ocient’s approach delivers is well worth any extra costs that may result from having to buy a large number of NVMe drives and running them in an on-prem fashion.

Back in 2016, few analytics database vendors were developing databases with NVMe in mind. Ocient sensed an opportunity. “We were all in on this NVMe drive concept very early on based on just us noticing that the existing database software that was out there wasn’t necessarily capitalizing on the kind of relatively novel capabilities that the drives have,” Kondiles told BigDATAwire in a recent interview. “And that was why we leaned in on it.”

That’s not to say that data lakes running on object storage don’t have their place. Companies that can’t predict what their analytical needs are going to be will benefit from the additional elasticity that the separation of compute and storage brings. But for certain types of always-on OLAP workloads–the sort that involve tens of petabytes of data and hundreds of trillions of records–the overhead incurred by accessing HDDs over the network in a data lake setting is just too much.

“The sorts of problems that we’re targeting and trying to solve, we see some really substantial performance improvements, cost improvements…exactly for those sorts of data that the data lake approach doesn’t necessarily always have the best results,” Kondiles said. “In some scenarios, there’s a lot of value to be had by keeping them separate. And in others, there’s a lot of value to not necessarily try to force a square peg in a round hole.”

Ocient caters to companies with some of the biggest big data requirements, such as telcos, ad tech firms, governmental agencies, financial services, and enterprises with large-scale observability workloads. Many of Ocient’s customers run their Ocient clusters on-prem, although there’s nothing to prevent the Ocient software from being run in the cloud, which some customers do.

Co-locating compute and storage reduces costs, but brings ancillary benefits too, Kondiles said. “We were focusing primarily on performance and cost effectiveness,” he said. “But it’s also space reduction and energy reduction, because you’re taking what was a bunch of storage nodes and a bunch of compute nodes and you combine them together into a single set of nodes, and the result is lower data center footprint and lower power utilization.”

Ocient’s analytics database is built on the relational model and uses standard ANSI SQL to access data. On top of that, it adds time-series and geospatial components, which invariably are important in the sort of massive IoT- and sensor-generated data sets that Ocient customers want to crunch. It also includes some machine learning primitives that allow customers to run predictive analytic functions.

But Ocient’s database isn’t your garden variety SQL store. For instance, the company has built erasure coding directly into its query engine, which allows it to minimize the amount of duplicate data that customers store while retaining the capability to do a full recovery in the event of drive losses. That’s an example of Ocient borrowing ideas from object store vendors.
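For readers unfamiliar with erasure coding, the general idea can be illustrated with the simplest possible scheme: a single XOR parity shard. This is only a sketch of the concept, not Ocient's implementation, whose coding scheme the company did not detail here; production systems typically use stronger codes that survive multiple simultaneous losses. All function names below are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length shards."""
    if len(a) != len(b):
        raise ValueError("shards must be the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(data_shards: List[bytes]) -> bytes:
    """One parity shard: the XOR of every data shard."""
    return reduce(xor_bytes, data_shards)


def recover(shards: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one lost shard (marked None) from the survivors."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild only one lost shard")
    repaired = list(shards)
    if missing:
        survivors = [s for s in shards if s is not None]
        # XORing the parity with all survivors leaves the missing shard.
        repaired[missing[0]] = reduce(xor_bytes, survivors, parity)
    return repaired


def storage_overhead(data_shards: int, parity_shards: int) -> float:
    """Raw bytes stored per byte of user data."""
    return (data_shards + parity_shards) / data_shards


if __name__ == "__main__":
    shards = [b"abcd", b"efgh", b"ijkl", b"mnop"]
    parity = make_parity(shards)
    damaged = [shards[0], None, shards[2], shards[3]]  # simulate a lost drive
    assert recover(damaged, parity) == shards
    print("4+1 parity overhead:", storage_overhead(4, 1))
    print("2-way mirroring overhead:", storage_overhead(1, 1))
```

In this toy layout, four data shards plus one parity shard cost 1.25 bytes of raw storage per byte of data, compared with 2 bytes for keeping a full second copy, while still tolerating the loss of any one shard.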

Here’s another area where Ocient zigs while the rest of the industry zags: secondary indexes.

“It is something that a lot of the bigger names kind of moved away from just because of the perceived complexity of managing a schema and the types of queries and whatever else,” Kondiles said. “And what we found is that, especially at these scales, the secondary indexes can be crucial for achieving reasonable either execution times or costs for the system simply because the data volume is so high.”
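The trade-off Kondiles describes can be sketched in a few lines of Python. This is a toy in-memory illustration with a hypothetical table and column names, not a representation of how Ocient stores or indexes data: a secondary index maps a non-key column value to the positions of matching rows, so a selective query touches only those rows instead of scanning everything.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical table of network events; "id" is the primary key.
ROWS = [
    {"id": 1, "carrier": "alpha", "bytes": 120},
    {"id": 2, "carrier": "beta", "bytes": 300},
    {"id": 3, "carrier": "alpha", "bytes": 75},
    {"id": 4, "carrier": "gamma", "bytes": 910},
]


def build_secondary_index(rows: List[dict], column: str) -> Dict[str, List[int]]:
    """Map each value of a non-key column to the row positions holding it."""
    index: Dict[str, List[int]] = defaultdict(list)
    for position, row in enumerate(rows):
        index[row[column]].append(position)
    return dict(index)


def full_scan(rows: List[dict], column: str, value: str) -> List[dict]:
    """Without an index: inspect every row."""
    return [row for row in rows if row[column] == value]


def index_lookup(rows: List[dict], index: Dict[str, List[int]], value: str) -> List[dict]:
    """With an index: read only the rows the index points to."""
    return [rows[position] for position in index.get(value, [])]


if __name__ == "__main__":
    carrier_index = build_secondary_index(ROWS, "carrier")
    via_scan = full_scan(ROWS, "carrier", "alpha")
    via_index = index_lookup(ROWS, carrier_index, "alpha")
    assert via_scan == via_index
    print(f"scan inspected {len(ROWS)} rows, index read {len(via_index)}")
```

The cost is that the index itself must be stored and kept current as data arrives, which is the management complexity Kondiles says pushed other vendors away from the approach.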

Ocient has taken a pragmatic approach to how it develops its software. It supports newer technologies, such as NVMe and erasure coding, while simultaneously adopting older architectures, like secondary indexes and co-located compute and storage, when it makes sense to do so.

The approach seems to be resonating. The company said last week that bookings over the first five months of 2025 were nearly triple the rate of last year. In April, the Chicago-based company announced the closure of its $42.1 million Series B round, bringing the company’s total venture investment to $132 million.

The company is now in expansion mode and seeking to grow revenues. As part of that drive, last week Ocient brought in John Morris to be its new CEO, replacing Gladwin in the corner suite. Morris and Gladwin worked together previously at Cleversafe, where Morris was brought in as CEO prior to the IBM acquisition.

“I could not be more thrilled to welcome John as he takes the helm as CEO,” said Gladwin, who is now Ocient’s executive chairman. “His operational and strategic leadership come at a pivotal time for Ocient, much like when he joined Cleversafe and helped drive tripled revenues, which resulted in the company’s $1.4B acquisition and 10x returns for investors.”

Will history repeat itself? Only time will tell.
