(Jonathan-Karwacki/Shutterstock)

Databricks today launched Agent Bricks, a new offering aimed at helping customers get AI agent systems up and running quickly, with the cost, safety, and efficiency they demand.

Many companies are investing significant resources in agentic AI. While the potential payoff through automation is large, they are finding that the actual process of building and deploying agents is quite difficult.

“You can give everybody the tools they need to build agents,” Databricks Vice President of Marketing Joel Minnick says. “But the actual process of getting agents to production could still be a lot easier for many customers.”

There’s not a problem with the AI models themselves. Rather, the issue is with ensuring the quality and cost of the AI models will meet expectations, Minnick says.

Databricks saw three main problems. The first one was the lack of a good way to evaluate AI applications on the sort of real-world workloads that customers want to run.

“Model providers will boast the latest and greatest version of this model can answer Math Olympiad style questions, or it’s really great at vibe coding,” Minnick tells BigDATAwire. “But that’s not reflective of a real life problem.”

For instance, if a company were trying to build a product recommendation agent, the agent might recommend a product that doesn’t exist, refuse to acknowledge a product that does exist, or even recommend that a customer purchase a competitor’s product. “So these are the kinds of actual evaluation problems customers are running into,” Minnick says.

Another issue is data availability. While companies may have large amounts of data in total, they might lack sufficient data to train an agent to do a specific task. It can also take a large number of tokens to train models on their data, and the cost of those tokens can add up.

Lastly, Databricks saw that optimization was an unsolved issue. Solving for quality and efficiency requires balancing competing demands, and that can take some degree of effort and sophistication, particularly when the underlying models from providers are changing on a monthly basis.

“It is a Herculean task to keep up with the latest and greatest research of optimization techniques,” Minnick says. “It’s hard, even for a company like Databricks. So for the average enterprise to keep up, it often just kind of became what feels right, but often feeling like I don’t think I’ve actually found the best scenario yet.”

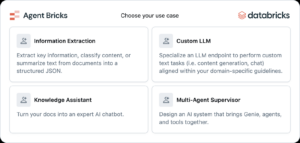

Agent Bricks attempts to solve these three AI challenges (evaluation, data availability, and optimization) within the context of specific use cases, including structured information extraction, reliable knowledge assistance, custom text transformation, and orchestrated multi-agent systems.

The offering uses research techniques developed by Mosaic AI Research to build the AI evaluation system that will help users tailor their models and agents to the task and data at hand. Agent Bricks will automatically generate a set of judges that will be used to test and evaluate the customer’s AI agents.

While the product can drive the evaluation process, customers can have full control over the specific configurations and criteria used, Minnick says. “You have full control over editing those, adding to those, taking things away, making sure they feel like exactly what you want these judges to be evaluating the agent against,” he says.

If the customer doesn’t have sufficient data to train an agent, Agent Bricks can generate synthetic data to be used for training. On the optimization front, the software can apply different techniques to find the right balance between quality and cost.

“We give you scorecards… [that] say, I used Llama 4 plus these optimization techniques, I got to 95% quality across the different checks that we agreed is appropriate for this. And I did it at this cost. In this other run, I used Claude and I used these optimization techniques and I got to 90% quality but 3x lower cost to operate this model,” Minnick says. “So we give customers a lot of choice around, for this given use case, where do I want to fall on that quality versus cost curve, and be able to get those things to production much, much faster.”
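The scorecard comparison Minnick describes can be pictured as a simple selection over runs. The following is only an illustrative sketch, not Databricks code: the `Scorecard` class, the run names, and the cost figures are hypothetical, and the only numbers taken from the quote are the 95% and 90% quality levels and the roughly 3x cost gap. It drops any run that another run beats on both quality and cost, then picks the cheapest remaining run that clears a quality floor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scorecard:
    # All fields are hypothetical; they are not an Agent Bricks schema.
    name: str       # label for one model + optimization run
    quality: float  # fraction of judge checks passed, 0.0 to 1.0
    cost: float     # relative cost to operate, arbitrary units


def pareto_front(cards):
    """Keep runs that no other run matches or beats on both axes."""
    front = []
    for c in cards:
        dominated = any(
            o.quality >= c.quality
            and o.cost <= c.cost
            and (o.quality > c.quality or o.cost < c.cost)
            for o in cards
        )
        if not dominated:
            front.append(c)
    # Cheapest first, so callers can scan from low cost to high cost.
    return sorted(front, key=lambda c: c.cost)


def cheapest_meeting(cards, min_quality):
    """Lowest-cost non-dominated run that meets the quality floor."""
    eligible = [c for c in pareto_front(cards) if c.quality >= min_quality]
    return eligible[0] if eligible else None


runs = [
    Scorecard("run_a", 0.95, 3.0),  # higher quality, higher cost
    Scorecard("run_b", 0.90, 1.0),  # lower quality, about 3x cheaper
    Scorecard("run_c", 0.88, 2.0),  # worse than run_b on both axes
]

choice = cheapest_meeting(runs, min_quality=0.93)
print(choice.name if choice else "no run meets the floor")
```

A team that can tolerate 90% quality would lower `min_quality` and land on the cheaper run; a team that cannot would pay for the more expensive one.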

Databricks isn’t new to machine learning model evaluation. The company has been supporting classical ML workflows around things like clustering and classification for many years. What’s different about the world of generative AI and agentic AI is that the evaluation process is much more organic, and requires a more flexible approach, Minnick says.

“How you evaluate them has to be a lot more fine-grained on understanding what quality actually looks like,” he says. “And that’s why these judges are so important, to be able to understand, okay, what exactly do I think this agent is going to have to encounter in the real world?…What do I think good looks like? And really understands what do I think the right response is?”

Agent Bricks is in beta now. One early tester was AstraZeneca, which took about 60 minutes to build a knowledge extraction agent that is capable of extracting relevant information from 400,000 research documents, Minnick says.

“For the first time, businesses can go from idea to production-grade AI on their own data with speed and confidence, with control over quality and cost tradeoffs,” Ali Ghodsi, CEO and co-founder of Databricks, stated. “No manual tuning, no guesswork and all the security and governance Databricks has to offer. It’s the breakthrough that finally makes enterprise AI agents both practical and powerful.”

Databricks made the announcement at its Data + AI Summit, which is taking place this week in San Francisco.
